R. Betser, E. Gofer, M-Y Levi, G. Gilboa, ICLR 2026.

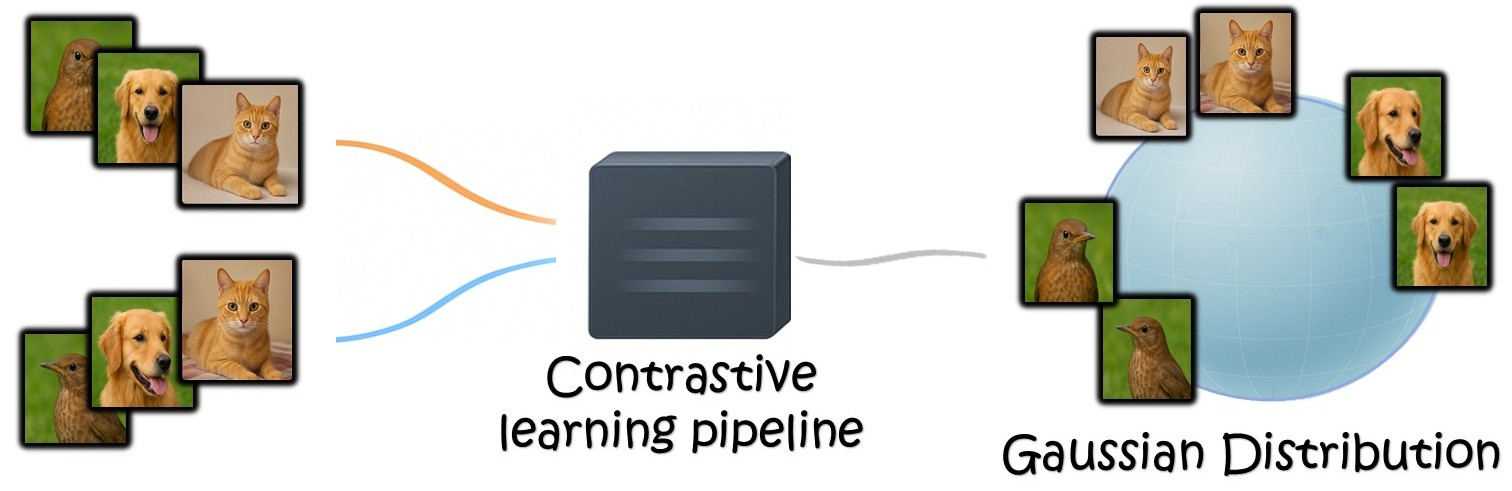

Contrastive learning has been a bedrock of unsupervised learning in recent years, enabling training on massive unlabeled data for both task-specific and general (foundation) models. A prototypical loss in contrastive training is InfoNCE and its variants. In this paper we show that the feature embeddings that emerge from InfoNCE training can be well approximated by a multivariate Gaussian distribution. We justify this claim in two ways. First, we show that under certain alignment and concentration assumptions, finite-dimensional projections of a high-dimensional representation approach a multivariate Gaussian distribution as the representation dimension approaches infinity.
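For readers less familiar with the loss, a minimal NumPy sketch of one common InfoNCE formulation may help. It is an illustration only, not the authors' implementation: the function name `info_nce_loss`, the `temperature` value, the cosine-similarity scoring, and the random inputs are all assumptions made here.

```python
import numpy as np

def info_nce_loss(z_a, z_b, temperature=0.1):
    """InfoNCE over a batch of paired embeddings (hypothetical helper).

    Row i of z_a and row i of z_b form a positive pair; every other row
    of z_b serves as a negative for row i of z_a.
    """
    # Project embeddings onto the unit sphere so scores are cosine similarities.
    z_a = z_a / np.linalg.norm(z_a, axis=1, keepdims=True)
    z_b = z_b / np.linalg.norm(z_b, axis=1, keepdims=True)

    # Pairwise similarity scores, scaled by the temperature.
    logits = z_a @ z_b.T / temperature

    # Row-wise log-softmax, shifted by the row maximum for numerical stability.
    logits = logits - logits.max(axis=1, keepdims=True)
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))

    # The positives sit on the diagonal; average their negative log-probability.
    return -np.mean(np.diag(log_prob))

# Synthetic stand-in for two augmented views of the same batch.
rng = np.random.default_rng(0)
view_a = rng.normal(size=(8, 16))
view_b = view_a + 0.05 * rng.normal(size=(8, 16))
loss = info_nce_loss(view_a, view_b)
```

The loss is small when each embedding is closest to its own positive and grows toward the log of the batch size when positives are indistinguishable from negatives.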

Next, under less strict assumptions, we show that adding a small regularization term (which vanishes asymptotically) that promotes low feature norm and high feature entropy yields similar asymptotic results. We demonstrate experimentally, in a synthetic setting, on CIFAR-10, and on pretrained foundation models, that the features indeed follow a Gaussian distribution almost precisely. One can use the Gaussian model to easily derive analytic expressions in the representation space and to obtain useful measures such as likelihood, data entropy, and mutual information. Hence, we expect this theoretical grounding to be useful in various applications involving contrastive learning.
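As a rough illustration of the kind of closed-form measures a Gaussian model makes available, the sketch below fits a mean and covariance to a feature matrix and evaluates the standard multivariate Gaussian formulas for log-likelihood, differential entropy, and mutual information between two feature blocks. The function names and the synthetic data are assumptions introduced here, not taken from the paper.

```python
import numpy as np

def fit_gaussian(features):
    """Estimate mean and covariance from an (n_samples, dim) feature matrix."""
    mean = features.mean(axis=0)
    cov = np.cov(features, rowvar=False)
    return mean, cov

def gaussian_log_likelihood(x, mean, cov):
    """Per-sample log-density of the rows of x under N(mean, cov)."""
    dim = mean.shape[0]
    diff = x - mean
    _, logdet = np.linalg.slogdet(cov)
    # Squared Mahalanobis distance of each row, via a linear solve.
    maha = np.einsum("ni,ni->n", diff, np.linalg.solve(cov, diff.T).T)
    return -0.5 * (dim * np.log(2.0 * np.pi) + logdet + maha)

def gaussian_entropy(cov):
    """Differential entropy (in nats) of a Gaussian with covariance cov."""
    dim = cov.shape[0]
    _, logdet = np.linalg.slogdet(cov)
    return 0.5 * (dim * (1.0 + np.log(2.0 * np.pi)) + logdet)

def gaussian_mutual_information(cov, dim_x):
    """Mutual information (in nats) between the first dim_x coordinates
    and the remaining coordinates of a jointly Gaussian vector."""
    _, logdet_x = np.linalg.slogdet(cov[:dim_x, :dim_x])
    _, logdet_y = np.linalg.slogdet(cov[dim_x:, dim_x:])
    _, logdet_joint = np.linalg.slogdet(cov)
    return 0.5 * (logdet_x + logdet_y - logdet_joint)

# Synthetic stand-in for learned embeddings (not real model features).
rng = np.random.default_rng(0)
features = rng.normal(size=(1000, 8))
mean, cov = fit_gaussian(features)
log_likelihoods = gaussian_log_likelihood(features, mean, cov)
entropy = gaussian_entropy(cov)
mutual_info = gaussian_mutual_information(cov, dim_x=4)
```

In practice the covariance estimate needs many more samples than dimensions to be well conditioned, so high-dimensional embeddings may call for a shrinkage or regularized estimator before these formulas are applied.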

Paper and project page will be published soon.