Eyal Gofer, Guy Gilboa, J. Mathematical Imaging and Vision (JMIV), 2024

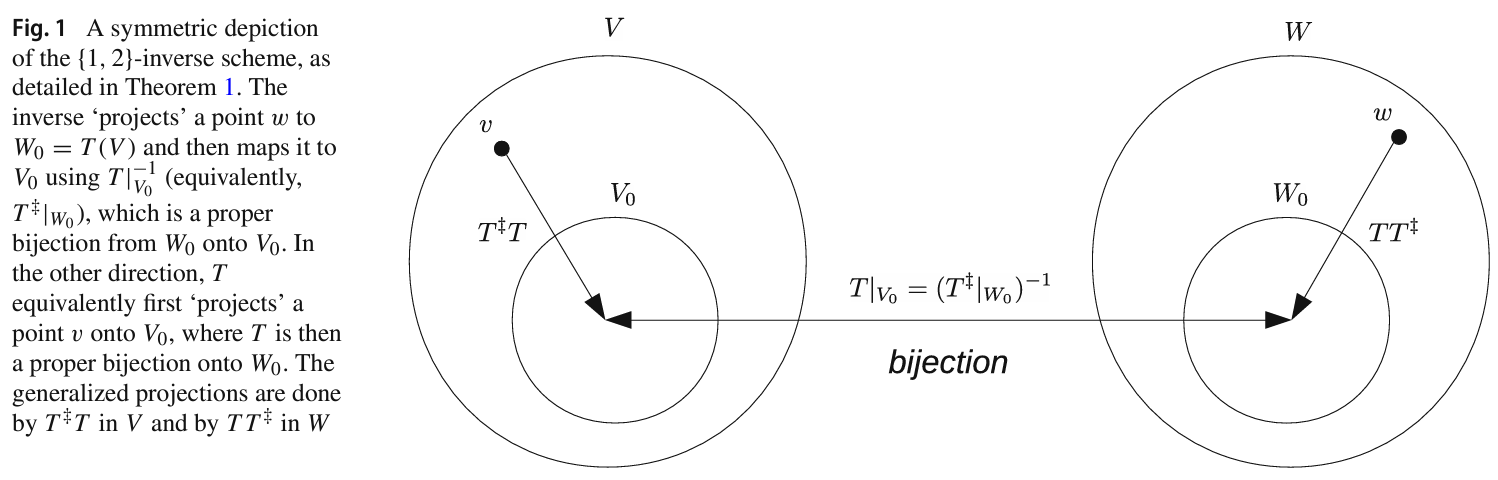

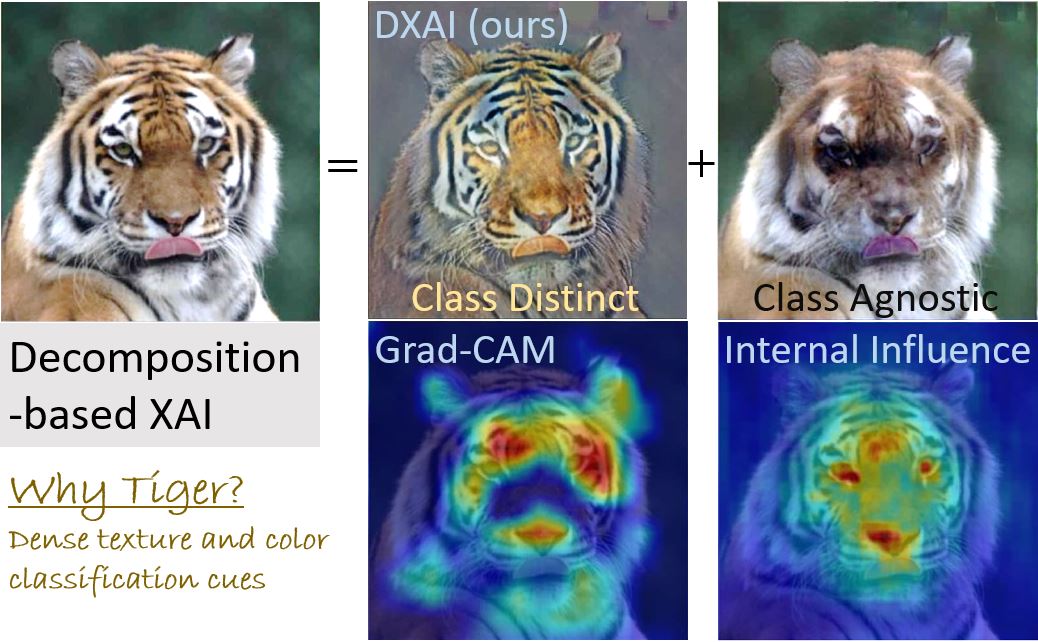

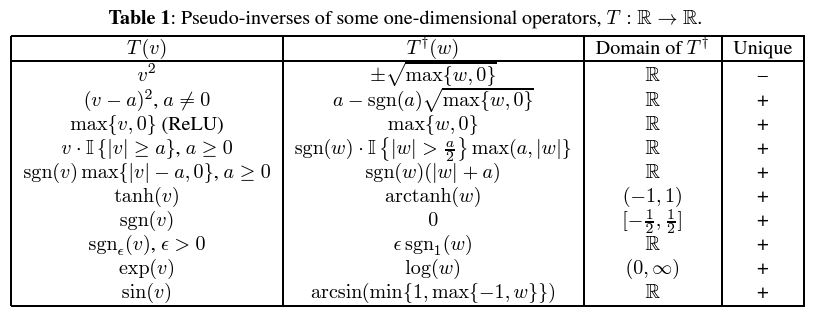

Inversion of operators is a fundamental concept in data processing. Inversion of linear operators is well studied, supported by established theory. When an inverse either does not exist or is not unique, generalized inverses are used. Most notable is the Moore–Penrose inverse, widely used in physics, statistics, and various fields of engineering. This work investigates generalized inversion of nonlinear operators. We first address broadly the desired properties of generalized inverses, guided by the Moore–Penrose axioms. We define the notion for general sets and then a refinement, termed pseudo-inverse, for normed spaces. We present conditions for existence and uniqueness of a pseudo-inverse and establish theoretical results investigating its properties, such as continuity, its value for operator compositions and projection operators, and others. Analytic expressions are given for the pseudo-inverse of some well-known, non-invertible, nonlinear operators, such as hard- or soft-thresholding and ReLU. We analyze a neural layer and discuss relations to wavelet thresholding. Next, the Drazin inverse, and a relaxation, are investigated for operators with equal domain and range. We present scenarios where inversion is expressible as a linear combination of forward applications of the operator. Such scenarios arise for classes of nonlinear operators with vanishing polynomials, similar to the minimal or characteristic polynomials for matrices. Inversion using forward applications may facilitate the development of new efficient algorithms for approximating generalized inversion of complex nonlinear operators.