Adam Wolff, Shachar Praisler, Ilya Tcenov and Guy Gilboa, “Super-Pixel Sampler – a Data-driven Approach for Depth Sampling and Reconstruction”, accepted to ICRA (Int. Conf. on Robotics and Automation) 2020.

See the video of our mechanical prototype

Abstract

Depth acquisition based on active illumination is essential for autonomous and robotic navigation. LiDARs (Light Detection And Ranging) with mechanical, fixed sampling templates are commonly used in today’s autonomous vehicles. An emerging technology, solid-state depth sensors with no mechanical parts, allows fast and adaptive scans.

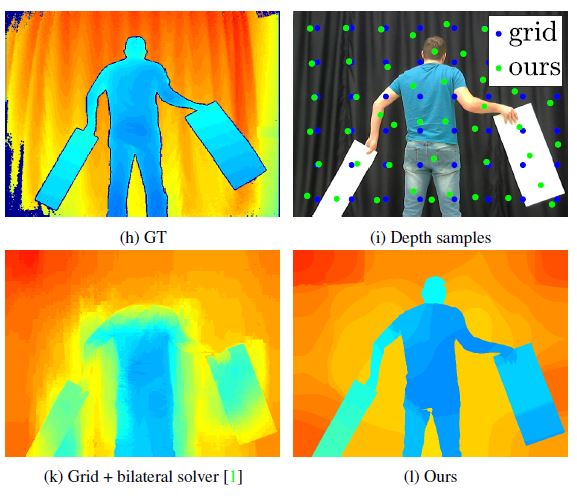

In this paper, we propose an adaptive, image-driven, fast sampling and reconstruction strategy. First, we formulate a piecewise-planar depth model and estimate its validity for indoor and outdoor scenes. Our model and experiments predict that, in the optimal case, adaptive sampling strategies with about 20-60 piecewise-planar structures can approximate a depth map well. This translates to requiring a single depth sample for every 1200 RGB samples, which strongly motivates investigating an adaptive framework. We propose a simple, generic sampling and reconstruction algorithm based on super-pixels. Our sampler consistently improves on grid and random sampling for a wide variety of reconstruction methods. We also propose an extremely simple and fast reconstruction for our sampler. It achieves state-of-the-art results compared to complex image-guided depth completion algorithms, reducing the required sampling rate by a factor of 3-4.
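The abstract does not spell out the algorithm, so the following is only a minimal sketch under stated assumptions, not the authors' implementation. It takes an integer super-pixel label map as given (for example, one produced by `skimage.segmentation.slic` on the RGB image). It places one depth sample per super-pixel at the member pixel closest to the segment centroid, and reconstructs depth by filling each super-pixel with that single value (piecewise-constant). A per-segment least-squares plane fit shows one way to measure how well a piecewise-planar model explains a dense ground-truth depth map. All function names are hypothetical.

```python
import numpy as np


def sample_superpixel_centers(labels):
    """Pick one (row, col) sample per segment of an integer H x W label map.

    Assumption for illustration: the sample is the segment pixel nearest to
    the segment centroid, so it always lies inside the segment.
    """
    rows, cols = np.indices(labels.shape)
    samples = {}
    for lab in np.unique(labels):
        mask = labels == lab
        r, c = rows[mask], cols[mask]
        k = np.argmin((r - r.mean()) ** 2 + (c - c.mean()) ** 2)
        samples[int(lab)] = (int(r[k]), int(c[k]))
    return samples


def reconstruct_piecewise_constant(labels, samples, depth):
    """Hypothetical reconstruction: fill each segment with its one sampled depth."""
    out = np.empty(labels.shape, dtype=float)
    for lab, (r, c) in samples.items():
        out[labels == lab] = depth[r, c]
    return out


def piecewise_planar_fit(labels, depth):
    """Fit z = a*col + b*row + c per segment by least squares; return the fitted map.

    Comparing this map with the true depth is one way to check how well a
    piecewise-planar model explains the scene for a given segmentation.
    """
    rows, cols = np.indices(labels.shape)
    fitted = np.empty(labels.shape, dtype=float)
    for lab in np.unique(labels):
        mask = labels == lab
        A = np.column_stack([cols[mask], rows[mask], np.ones(mask.sum())]).astype(float)
        coef, *_ = np.linalg.lstsq(A, depth[mask].astype(float), rcond=None)
        fitted[mask] = A @ coef
    return fitted


# Toy scene: two "super-pixels" (left and right halves of a 4 x 6 image).
labels = np.zeros((4, 6), dtype=int)
labels[:, 3:] = 1
rows, cols = np.indices(labels.shape)
depth = np.where(labels == 0, 1.0 + 0.5 * cols, 5.0)  # tilted plane | flat plane

samples = sample_superpixel_centers(labels)   # 2 depth samples for 24 pixels
recon = reconstruct_piecewise_constant(labels, samples, depth)
planar = piecewise_planar_fit(labels, depth)
print(samples, np.abs(planar - depth).max())
```

In this toy scene each segment really is planar, so the plane fit recovers the depth exactly, while the piecewise-constant fill is exact only on the flat segment. The fill needs a single sample per segment, whereas a plane needs at least three.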

A single-pixel depth camera built in our lab illustrates the concept.