D. Rotman, G. Gilboa, “A depth restoration occlusionless temporal dataset”, Int. Conf. on 3D Vision (3DV), Stanford University, 2016.

Abstract:

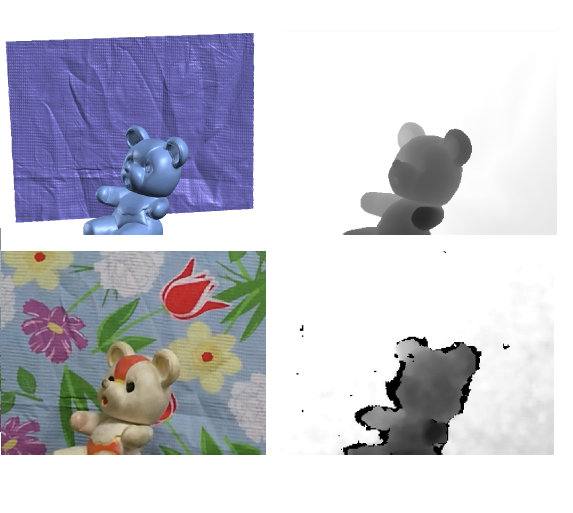

Depth restoration, the task of correcting depth noise and artifacts, has recently risen in popularity due to the increase in commodity depth cameras. When assessing the quality of existing methods, most researchers resort to the popular Middlebury dataset; however, this dataset was not created for depth enhancement, and therefore lacks the option of comparing genuine low-quality depth images with their high-quality, ground-truth counterparts. To address this shortcoming, we present the Depth Restoration Occlusionless Temporal (DROT) dataset. This dataset offers real depth sensor input coupled with registered pixel-to-pixel color images, and the ground-truth depth to which we wish to compare. Our dataset includes not only Kinect 1 and Kinect 2 data, but also an Intel R200 sensor intended for integration into hand-held devices. Beyond this, we present a new temporal depth-restoration method. Utilizing multiple frames, we create a number of possibilities for an initial degraded depth map, which allows us to arrive at a more educated decision when refining depth images. Evaluating this method with our dataset shows significant benefits, particularly for overcoming real sensor-noise artifacts.