G. Drozdov, Y. Shapiro, G. Gilboa, “Robust recovery of heavily degraded depth measurements”, Int. Conf. on 3D Vision (3DV), Stanford University, 2016.

Abstract:

The RGB-D sensor revolution is advancing towards mobile platforms for robotics, autonomous vehicles and consumer hand-held devices. Strong constraints on power consumption and system price call for new, powerful algorithms that can robustly handle very low-quality raw data.

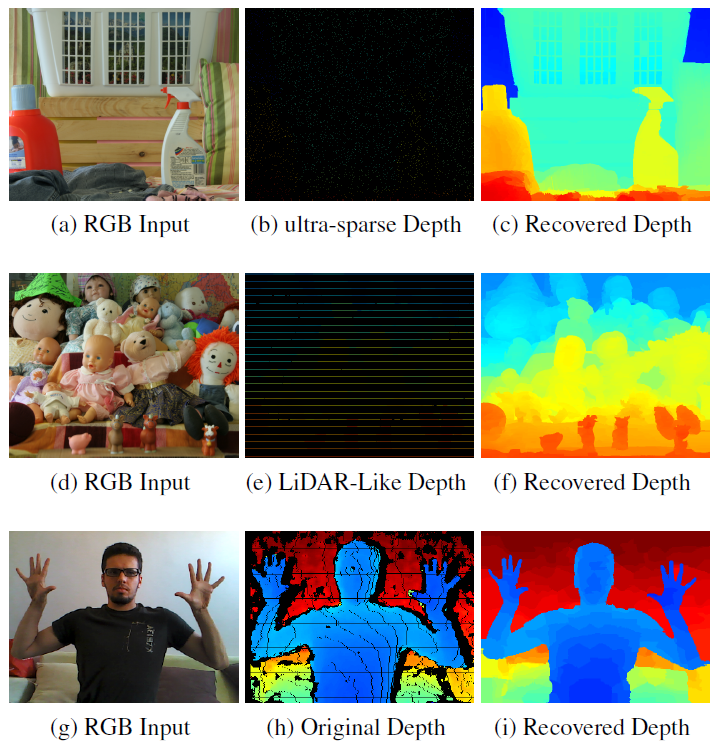

In this paper we demonstrate the ability to reliably recover depth measurements from a variety of highly degraded depth modalities, coupled with standard RGB imagery. The method is based on a regularizer that fuses super-pixel information with the total generalized variation (TGV) functional.
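For reference, the second-order TGV functional in its standard form (as introduced by Bredies, Kunisch and Pock) is written below. The abstract does not say how the super-pixel information is combined with it, so that part is deliberately not shown; the symbols $u$ (depth map on the image domain $\Omega$), $w$ (auxiliary vector field) and the weights $\alpha_0, \alpha_1$ follow the standard definition rather than this paper's notation.

$$
\mathrm{TGV}^2_{\alpha}(u) = \min_{w} \; \alpha_1 \int_{\Omega} \lvert \nabla u - w \rvert \, dx \; + \; \alpha_0 \int_{\Omega} \lvert \mathcal{E}(w) \rvert \, dx,
\qquad \mathcal{E}(w) = \tfrac{1}{2}\left(\nabla w + \nabla w^{\top}\right)
$$

Because $w$ can absorb a constant gradient, $\mathrm{TGV}^2_{\alpha}$ vanishes on affine functions, so slanted planar surfaces in a depth map are not penalized, and the staircasing typical of first-order total variation is avoided.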

We evaluate our algorithm on several different degradations, including Intel's new RealSense hand-held device, LiDAR-type data and ultra-sparse random sampling. In all of these heavily degraded modalities, our robust algorithm outperforms the state of the art. Additionally, we suggest a robust error measure based on Tukey's biweight metric, which ranks algorithm performance better because it does not reward blurry, non-physical depth results.
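A minimal sketch of a Tukey-biweight depth error is given below, assuming the standard Tukey biweight loss applied to per-pixel depth residuals and averaged over pixels with valid ground truth. The function names `tukey_rho` and `tukey_depth_error`, the convention that zero ground-truth depth marks a missing pixel, and the choice of the tuning constant `c` are all hypothetical; the abstract does not give the paper's exact normalization or constant.

```python
import numpy as np


def tukey_rho(residual, c):
    """Tukey's biweight loss, applied element-wise.

    rho(r) = (c^2 / 6) * (1 - (1 - (r / c)^2)^3)   for |r| <= c
    rho(r) = c^2 / 6                               for |r| >  c
    """
    r = np.asarray(residual, dtype=float)
    cap = c ** 2 / 6.0
    inner = cap * (1.0 - (1.0 - (r / c) ** 2) ** 3)
    # Residuals beyond c are clipped to the constant cap, so a gross error
    # costs the same whether it is off by 2c or by 200c.
    return np.where(np.abs(r) <= c, inner, cap)


def tukey_depth_error(estimate, ground_truth, c):
    """Mean Tukey biweight loss over pixels with valid ground truth.

    Hypothetical helper: treating ground-truth depth == 0 as 'missing'
    is an assumption, not something stated in the paper's abstract.
    """
    est = np.asarray(estimate, dtype=float)
    gt = np.asarray(ground_truth, dtype=float)
    valid = gt > 0
    return float(np.mean(tukey_rho(est[valid] - gt[valid], c)))
```

Because the loss saturates at $c^2/6$, a single badly wrong pixel cannot dominate the score, in contrast to an RMSE-style measure where one large error can outweigh many small ones. One plausible reading of the abstract's claim is that this removes the incentive to smear depth edges just to avoid a few large errors.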