Category: Publications

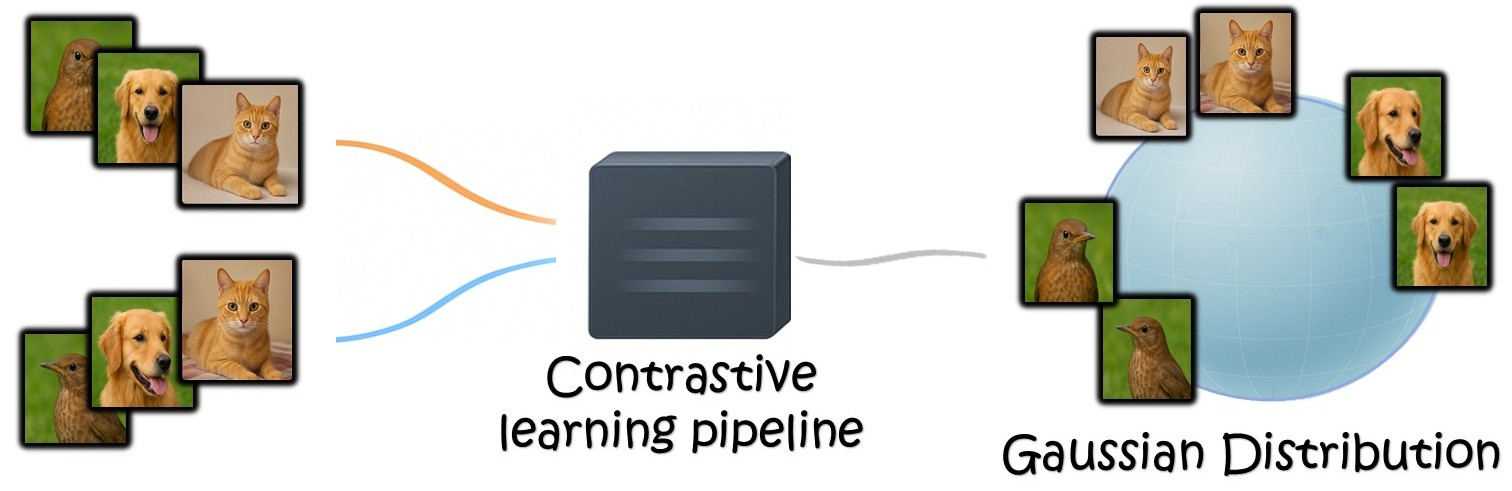

ICLR 2026: InfoNCE Induces Gaussian Distribution

R. Betser, E. Gofer, M-Y Levi, G. Gilboa, ICLR 2026.

Contrastive learning has been a bedrock of unsupervised learning in recent years, allowing training with massive unlabeled data for both task-specific and general (foundation) models. A prototypical loss in contrastive training is InfoNCE and its variants. In this paper we show that the feature embeddings which emerge from InfoNCE training can be well approximated by a multivariate Gaussian distribution. We justify this claim by taking two approaches. First, we show that under certain alignment and concentration assumptions, finite projections of a high-dimensional representation approach a multivariate Gaussian distribution as the representation dimension approaches infinity.

Next, under less strict assumptions, we show that adding a small regularization term (which vanishes asymptotically) that promotes low feature norm and high feature entropy yields similar asymptotic results. We demonstrate experimentally, in a synthetic setting, on CIFAR-10 and on pretrained foundation models, that the features indeed follow an almost precisely Gaussian distribution. One can use the Gaussian model to easily derive analytic expressions in the representation space and to obtain very useful measures, such as likelihood, data entropy and mutual information. Hence, we expect such theoretical grounding to be very useful in various applications involving contrastive learning.
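As an illustration of the analytic measures mentioned above, the following is a minimal sketch of the standard closed-form expressions for a multivariate Gaussian. It is not taken from the paper: the function names are hypothetical, and the random array is only a synthetic stand-in for learned features.

```python
import numpy as np

def fit_gaussian(features):
    """Estimate mean and covariance from an (n_samples, dim) feature array."""
    mean = features.mean(axis=0)
    cov = np.cov(features, rowvar=False)
    return mean, cov

def gaussian_log_likelihood(x, mean, cov):
    """Log-density of each row of x under N(mean, cov)."""
    dim = mean.shape[0]
    diff = x - mean
    # Solve a linear system instead of inverting the covariance explicitly.
    maha = np.einsum("ij,ij->i", diff, np.linalg.solve(cov, diff.T).T)
    _, logdet = np.linalg.slogdet(cov)
    return -0.5 * (maha + logdet + dim * np.log(2.0 * np.pi))

def gaussian_entropy(cov):
    """Differential entropy (in nats) of a Gaussian with covariance cov."""
    dim = cov.shape[0]
    _, logdet = np.linalg.slogdet(cov)
    return 0.5 * (logdet + dim * (1.0 + np.log(2.0 * np.pi)))

def gaussian_mutual_information(cov, k):
    """Mutual information (nats) between the first k coordinates and the rest."""
    _, logdet_x = np.linalg.slogdet(cov[:k, :k])
    _, logdet_y = np.linalg.slogdet(cov[k:, k:])
    _, logdet_xy = np.linalg.slogdet(cov)
    return 0.5 * (logdet_x + logdet_y - logdet_xy)

# Synthetic stand-in for embeddings (assumption: real features would be used here).
rng = np.random.default_rng(0)
features = rng.normal(size=(5000, 8))
mean, cov = fit_gaussian(features)
log_lik = gaussian_log_likelihood(features, mean, cov)
entropy = gaussian_entropy(cov)
```

Under the Gaussian model, the average negative log-likelihood of the fitted samples is close to the entropy, which gives a quick sanity check on the fit.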

Paper and project page will be published soon.

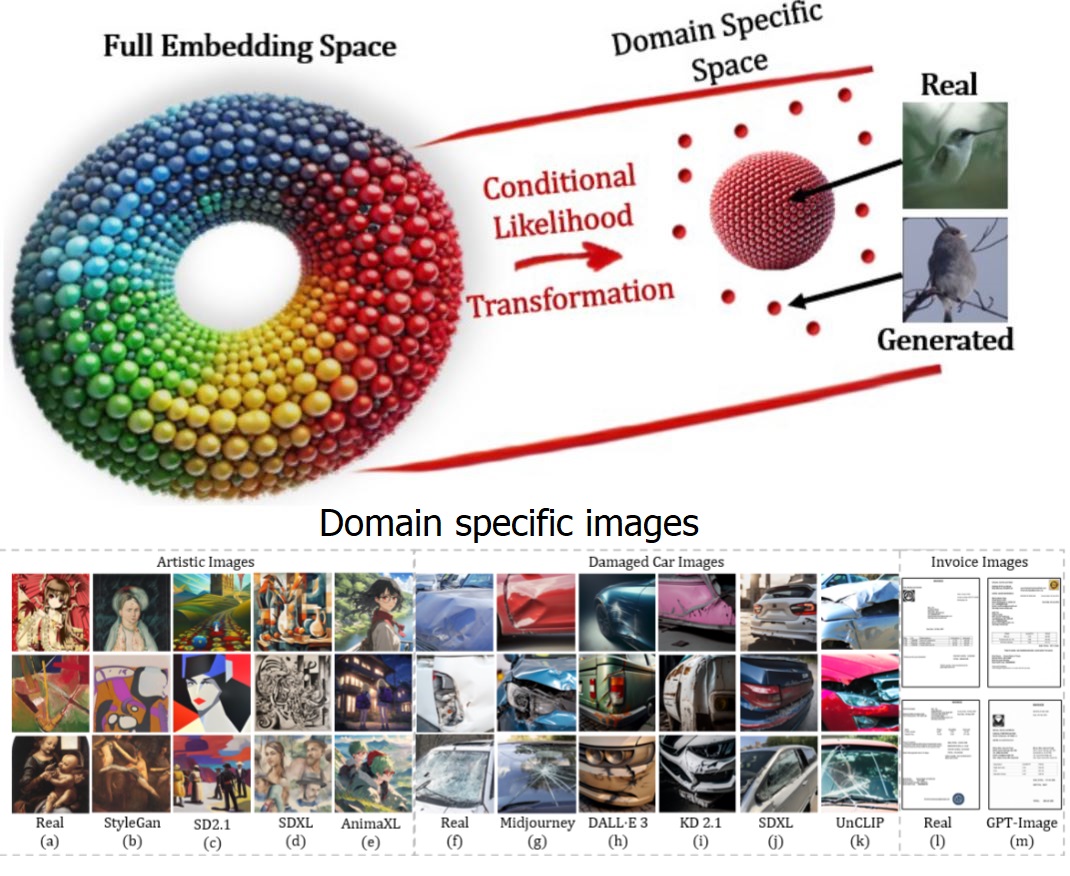

WACV 2026: General and Domain-Specific Zero-shot Detection of Generated Images via Conditional Likelihood

Roy Betser, Omer Hofman, Roman Vainshtein, Guy Gilboa, “General and Domain-Specific Zero-shot Detection of Generated Images via Conditional Likelihood”, accepted to Winter Conference on Applications of Computer Vision (WACV) 2026.

The rapid advancement of generative models, particularly diffusion-based methods, has significantly improved the realism of synthetic images. As new generative models continuously emerge, detecting generated images remains a critical challenge. While fully supervised and few-shot methods have been proposed, maintaining an updated dataset is time-consuming and challenging. Consequently, zero-shot methods have gained increasing attention in recent years. We find that existing zero-shot methods often struggle to adapt to specific image domains, such as artistic images, limiting their real-world applicability. In this work, we introduce CLIDE, a novel zero-shot detection method based on conditional likelihood approximation. Our approach computes likelihoods conditioned on real images, enabling adaptation across diverse image domains. We extensively evaluate CLIDE, demonstrating state-of-the-art performance on a large-scale general dataset and significantly outperforming existing methods in domain-specific cases. These results demonstrate the robustness of our method and underscore the need for broad, domain-aware generalization in the AI-generated image detection task.

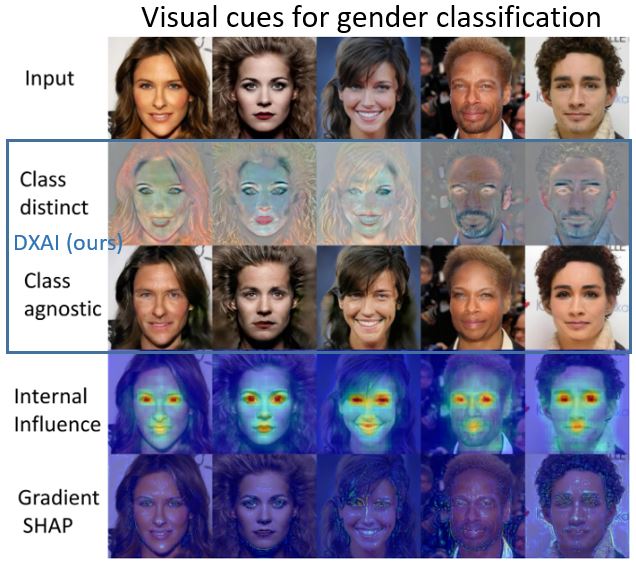

The Visual Computer 2025: DXAI: Explaining Classification by Image Decomposition

Elnatan Kadar, Guy Gilboa, “DXAI: Explaining Classification by Image Decomposition”, accepted to The Visual Computer 2025.

We propose a new way to explain and visualize neural network classification through decomposition-based explainable AI (DXAI). Instead of providing an explanation heatmap, our method yields a decomposition of the image into class-agnostic and class-distinct parts, with respect to the data and the chosen classifier. Following a fundamental signal processing paradigm of analysis and synthesis, the original image is the sum of the decomposed parts. We thus obtain a radically different way of explaining classification. The class-agnostic part is ideally composed of all image features which do not possess class information, while the class-distinct part is its complement. This new visualization can be more helpful and informative in certain scenarios, especially when the attributes are dense, global and additive in nature, for instance, when colors or textures are essential for class distinction.

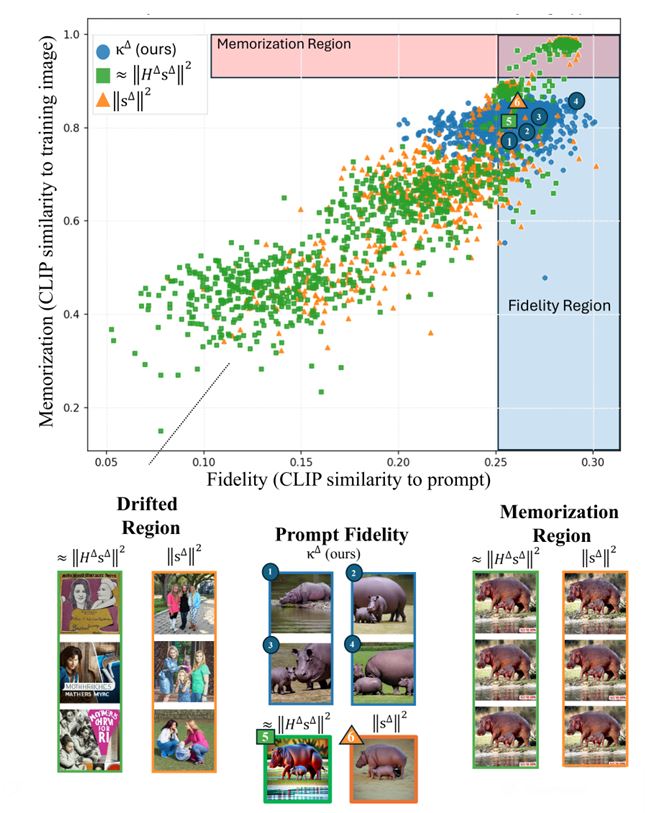

NeuReps 2025 (NeurIPS workshop): Tracking Memorization Geometry throughout the Diffusion Model Generative Process

Jonathan Brokman, Itay Gershon, Omer Hofman, Guy Gilboa, Roman Vainshtein

Memorization in generative text-to-image diffusion models is a phenomenon where instead of valid image generations, the model outputs near-verbatim reproductions of training images. This poses privacy and copyright risks, and remains difficult to prevent without harming prompt fidelity. We present a mid-generation, geometry-informed criterion that detects, and then helps avoid (mitigate), memorized outputs. Our method analyzes the natural image distribution manifold as learnt by the diffusion model. We analyze a memorization criterion that has a local curvature interpretation. Thus we can track the generative process, and our criterion’s trajectory throughout it, to understand typical geometrical structures traversed throughout this process. This is harnessed as a geometry-aware indicator that distinguishes memorized from valid generations. Notably, our criterion uses only the direction of the normalized score field, unlike prior magnitude-based methods; combining direction and magnitude we improve mid-generation detection SOTA by %. Beyond detecting memorization, we use this indicator as a plug-in to a mitigation policy to steer trajectories away from memorized basins while preserving alignment to the text. Empirically, this demonstrates improved fidelity–memorization trade-off over the competitors. By linking memorization to magnitude-invariant geometric signatures of the generative process, our work opens a new direction for understanding—and systematically mitigating—failure modes in diffusion models. Official code: https://bit.ly/4ndeISd

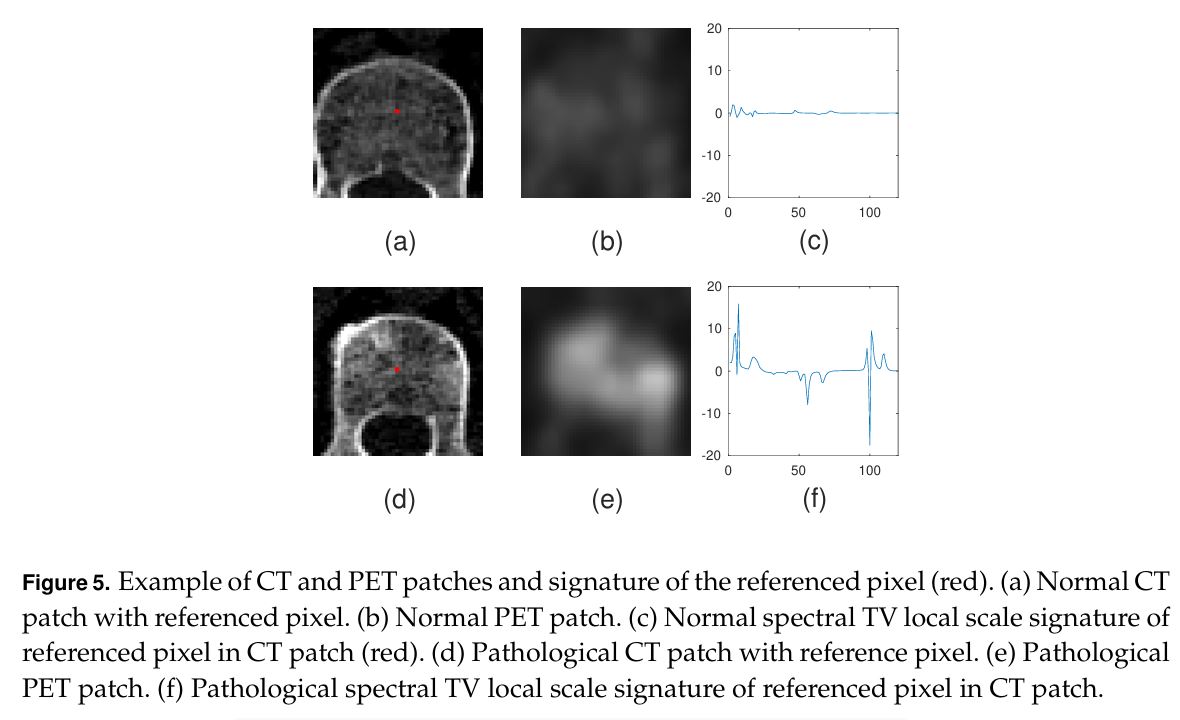

Philosophical Transactions A (2025): Ensemble of Weak Spectral Total Variation Learners: a PET-CT Case Study

Anna Rosenberg, John Kennedy, Zohar Keidar, Yehoshua Y. Zeevi and Guy Gilboa, Philosophical Transactions of the Royal Society A, 2025.

Abstract:

When solving computer vision problems through machine learning, one often encounters a lack of sufficient training data. To mitigate this, we propose the use of ensembles of weak learners based on spectral total-variation (STV) features (Gilboa G. 2014 A total variation spectral framework for scale and texture analysis. SIAM J. Imaging Sci. 7, 1937–1961. (doi:10.1137/130930704)). The features are related to nonlinear eigenfunctions of the total-variation subgradient and can characterize textures well at various scales. It was shown (Burger M, Gilboa G, Moeller M, Eckardt L, Cremers D. 2016 Spectral decompositions using one-homogeneous functionals. SIAM J. Imaging Sci. 9, 1374–1408. (doi:10.1137/15m1054687)) that, in the one-dimensional case, orthogonal features are generated, whereas in two dimensions the features are empirically lowly correlated. Ensemble learning theory advocates the use of lowly correlated weak learners. We thus propose here to design ensembles using learners based on STV features. To show the effectiveness of this paradigm, we examine a hard real-world medical imaging problem: the predictive value of computed tomography (CT) data for high uptake in positron emission tomography (PET) for patients suspected of skeletal metastases. The database consists of 457 scans with 1524 unique pairs of registered CT and PET slices. Our approach is compared with deep-learning methods and with radiomics features, showing that STV learners perform best (AUC=0.87), compared with neural nets (AUC=0.75) and radiomics (AUC=0.79). We observe that fine STV scales in CT images are especially indicative of the presence of high uptake in PET.
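The preference for lowly correlated weak learners can be illustrated with a textbook variance argument. The sketch below is not from the paper; it assumes a simplified setting in which all learners have equal error variance and equal pairwise correlation, and the function names are hypothetical.

```python
import numpy as np

def ensemble_variance(sigma2, rho, m):
    """Variance of the average of m learners, each with variance sigma2
    and pairwise correlation rho (simplifying assumption)."""
    return rho * sigma2 + (1.0 - rho) * sigma2 / m

def ensemble_variance_from_cov(cov):
    """Same quantity computed directly from a full covariance matrix."""
    m = cov.shape[0]
    weights = np.full(m, 1.0 / m)
    return weights @ cov @ weights

m, sigma2 = 10, 1.0
for rho in (0.0, 0.2, 0.8):
    # Equicorrelated covariance: sigma2 on the diagonal, rho * sigma2 elsewhere.
    cov = sigma2 * ((1.0 - rho) * np.eye(m) + rho * np.ones((m, m)))
    print(rho, ensemble_variance(sigma2, rho, m), ensemble_variance_from_cov(cov))
```

The term `rho * sigma2` does not shrink as more learners are added, so averaging helps most when the learners' errors are weakly correlated.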

ICML 2025: Whitened CLIP as a Likelihood Surrogate of Images and Captions

Roy Betser, Meir-Yossef Levi, Guy Gilboa

Proceedings of the 42nd International Conference on Machine Learning (ICML), 2025

Likelihood approximations for images are not trivial to compute and can be useful in many applications. We examine the use of Contrastive Language-Image Pre-training (CLIP) to assess the likelihood of images and captions. We introduce Whitened CLIP, a novel transformation of the CLIP latent space via an invertible linear operation. This transformation ensures that each feature in the embedding space has zero mean, unit standard deviation, and no correlation with any other feature, resulting in an identity covariance matrix. We show that the statistics of the whitened embeddings can be well approximated by a standard normal distribution. Thus, the log-likelihood is estimated simply by the squared Euclidean norm in the whitened embedding space. The whitening procedure is completely training-free and is performed using a pre-computed whitening matrix, hence it is very fast. We present several preliminary experiments demonstrating the properties and applicability of these likelihood scores to images and captions.
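The whitening step and the resulting likelihood score can be sketched as follows. This is a minimal illustration rather than the paper's code: it uses PCA whitening as one possible invertible linear whitening, the function names and the `eps` parameter are hypothetical, and synthetic correlated data stands in for CLIP embeddings.

```python
import numpy as np

def fit_whitening(embeddings, eps=1e-8):
    """Return (mean, W) such that (x - mean) @ W.T has identity covariance."""
    mean = embeddings.mean(axis=0)
    cov = np.cov(embeddings, rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(cov)
    # PCA whitening: W = Lambda^{-1/2} V^T; eps guards against tiny eigenvalues.
    W = (eigvecs / np.sqrt(eigvals + eps)).T
    return mean, W

def whiten(embeddings, mean, W):
    """Apply the pre-computed whitening transform to each row."""
    return (embeddings - mean) @ W.T

def log_likelihood(whitened):
    """Log-density under a standard normal, from the squared Euclidean norm."""
    dim = whitened.shape[1]
    sq_norm = np.sum(whitened ** 2, axis=1)
    return -0.5 * (sq_norm + dim * np.log(2.0 * np.pi))

# Synthetic correlated data with a non-zero mean (stand-in for real embeddings).
rng = np.random.default_rng(0)
mixing = np.eye(8) + 0.1 * rng.normal(size=(8, 8))
emb = rng.normal(size=(4000, 8)) @ mixing.T + 3.0

mean, W = fit_whitening(emb)   # computed once, offline
z = whiten(emb, mean, W)       # a single matrix product per batch
scores = log_likelihood(z)     # higher score = more likely under the model
```

The score here is the density of the whitened vector. The density of the original embedding differs only by the constant log-determinant of `W`, so the ranking of samples is unchanged.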

ICML 2025: The Double-Ellipsoid Geometry of CLIP

Meir Yossef Levi, Guy Gilboa

Proceedings of the 42nd International Conference on Machine Learning (ICML), 2025

Contrastive Language-Image Pre-Training (CLIP) is highly instrumental in machine learning applications within a large variety of domains.

We investigate the geometry of this embedding, which is still not well understood, and show that text and image reside on linearly separable ellipsoid shells, not centered at the origin. We explain the benefits of this structure, which allows instances to be better embedded according to their uncertainty during contrastive training.

Frequent concepts in the dataset yield more false negatives, inducing greater uncertainty.

A new notion of conformity is introduced, which measures the average cosine similarity of an instance to any other instance within a representative data set. We prove this measure can be accurately estimated by simply computing the cosine similarity to the modality mean vector. Furthermore, we find that CLIP’s modality gap optimizes the matching of the conformity distributions of image and text.
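The relation between conformity and the mean vector can be checked numerically. The sketch below is an illustration under stated assumptions, not the paper's code: embeddings are unit-normalized before averaging, the function names are hypothetical, and synthetic points with an offset from the origin stand in for one modality of CLIP embeddings.

```python
import numpy as np

def conformity(embeddings):
    """Average cosine similarity of each instance to all other instances."""
    unit = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    sims = unit @ unit.T
    n = unit.shape[0]
    # Exclude the self-similarity (equal to 1) from the average.
    return (sims.sum(axis=1) - 1.0) / (n - 1)

def cosine_to_mean(embeddings):
    """Cosine similarity of each instance to the mean of the unit vectors."""
    unit = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    mean = unit.mean(axis=0)
    return unit @ mean / np.linalg.norm(mean)

# Synthetic stand-in: a cloud whose mean is away from the origin.
rng = np.random.default_rng(0)
emb = rng.normal(size=(500, 32)) + 2.0

exact = conformity(emb)          # O(n^2) pairwise computation
estimate = cosine_to_mean(emb)   # O(n) computation
corr = np.corrcoef(exact, estimate)[0, 1]
```

For unit-normalized vectors the two quantities are related by an affine map, since the sum of cosine similarities to all instances equals `n` times the dot product with the mean. The cheap estimate therefore preserves the ordering of instances.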

ICLR 2025: Manifold Induced Biases for Zero-shot and Few-shot Detection of Generated Images

Abstract:

Distinguishing between real and AI-generated images, commonly referred to as ‘image detection’, presents a timely and significant challenge. Despite extensive research in the (semi-)supervised regime, zero-shot and few-shot solutions have only recently emerged as promising alternatives. Their main advantage is in alleviating the need for ongoing maintenance of training data, which quickly becomes outdated due to advances in generative technologies. We identify two main gaps: (1) a lack of theoretical grounding for the methods, and (2) significant room for performance improvements in zero-shot and few-shot regimes. Our approach is founded on understanding and quantifying the biases inherent in generated content, and we use these quantities as criteria for characterizing generated images. Specifically, we explore the biases of the implicit probability manifold, captured by a pre-trained diffusion model. Through score-function analysis, we approximate the curvature, gradient, and bias towards points on the probability manifold, establishing criteria for detection in the zero-shot regime. We further extend our contribution to the few-shot setting by employing a mixture-of-experts methodology. Empirical results across 20 generative models demonstrate that our method outperforms current approaches in both zero-shot and few-shot settings. This work advances the theoretical understanding and practical usage of generated content biases through the lens of manifold analysis.

SSVM 2025: Identifying Memorization of Diffusion Models through p-Laplace Analysis

Jonathan Brokman, Amit Giloni, Omer Hofman, Roman Vainshtein, Hisashi Kojima, and Guy Gilboa, Int. Conf. on Scale Space and Variational Methods, 2025

Abstract:

Diffusion models, today’s leading image generative models, estimate the score function, i.e. the gradient of the log probability of (perturbed) data samples, without direct access to the underlying probability distribution. This work investigates whether the estimated score function can be leveraged to compute higher-order differentials, namely p-Laplace operators. We show that these operators can be employed to identify memorized training data. We propose a numerical p-Laplace approximation based on the learned score functions, showing its effectiveness in identifying key features of the probability landscape. We analyze the structured case of Gaussian mixture models, and demonstrate that the results carry over to image generative models, where memorization identification based on the p-Laplace operator is performed for the first time.
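To make the Gaussian mixture case concrete, the following is a rough sketch of how a p-Laplace value could be estimated from a score function alone. It is not the paper's approximation scheme: it assumes the standard definition of the p-Laplacian as the divergence of |s|^(p-2) s applied to the score s of the log-density, uses plain central differences, and replaces the learned score with the analytic score of an equal-weight isotropic mixture. All function names are hypothetical.

```python
import numpy as np

def gmm_score(x, means, sigma):
    """Score (gradient of the log-density) of an equal-weight isotropic GMM at x."""
    diffs = means - x                                   # shape (K, d)
    logits = -0.5 * np.sum(diffs ** 2, axis=1) / sigma ** 2
    resp = np.exp(logits - logits.max())
    resp /= resp.sum()                                  # component responsibilities
    return resp @ diffs / sigma ** 2

def p_laplace(score_fn, x, p=2.0, h=1e-3):
    """Central-difference estimate of div(|s|^(p-2) s) at x, with s = score_fn."""
    x = np.asarray(x, dtype=float)

    def flux(z):
        s = score_fn(z)
        return np.linalg.norm(s) ** (p - 2.0) * s

    total = 0.0
    for i in range(x.shape[0]):
        step = np.zeros_like(x)
        step[i] = h
        # Partial derivative of the i-th flux component along axis i.
        total += (flux(x + step)[i] - flux(x - step)[i]) / (2.0 * h)
    return total

# Two well-separated modes in 2D (toy configuration chosen for illustration).
means = np.array([[-2.0, 0.0], [2.0, 0.0]])
sigma = 0.5
score = lambda z: gmm_score(z, means, sigma)

at_mode = p_laplace(score, np.array([2.0, 0.0]), p=2.0)  # on top of a mode
at_mid = p_laplace(score, np.array([0.0, 0.0]), p=2.0)   # between the modes
```

In this toy setting with p = 2 the estimate is strongly negative at a mode and positive between the modes, so the sign and magnitude of the operator reflect the local shape of the probability landscape.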