Eyal Gofer, Guy Gilboa, Accepted to SSVM-2023 (oral)

9th International Conference, SSVM 2023, Santa Margherita di Pula, Italy, May 21–25, 2023, Proceedings, Springer LNCS 14009, pp. 29-41, 2023.

Springer conference proceedings

Abstract

The Moore-Penrose inverse is widely used in physics, statistics and various fields of engineering. Among other characteristics, it captures well the notion of inversion of linear operators in the case of overcomplete data. In data science, nonlinear operators are extensively used. In this paper we define and characterize the fundamental properties of a pseudo-inverse for nonlinear operators.

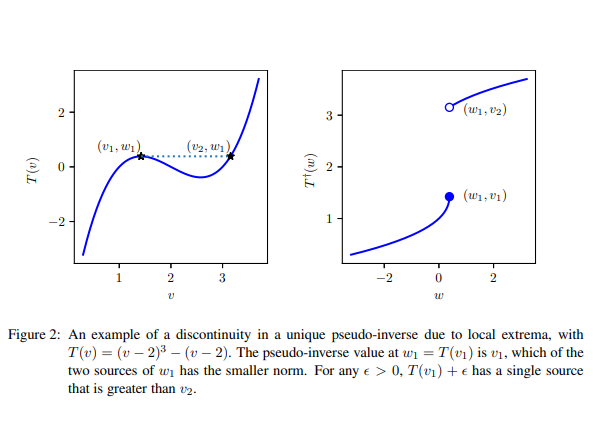

The concept is defined broadly: first for general sets, and then, as a refinement, for normed spaces. Our pseudo-inverse for normed spaces yields the Moore-Penrose inverse when the operator is a matrix. We present conditions for the existence and uniqueness of a pseudo-inverse and establish theoretical results on its properties, including continuity and its value for operator compositions and projection operators. Analytic expressions are given for the pseudo-inverse of some well-known, non-invertible, nonlinear operators, such as hard- or soft-thresholding and ReLU. Finally, we analyze a neural layer and discuss relations to wavelet thresholding and to regularized loss minimization.
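
As a point of reference for the matrix case mentioned above, the following is a minimal sketch of the classical Moore-Penrose inverse, assuming NumPy is available. The matrix `A` and vector `v` are arbitrary illustrative values, not taken from the paper. The sketch checks the four Penrose conditions numerically and confirms that applying the pseudo-inverse gives the least-squares solution of an overdetermined system. It does not implement the nonlinear pseudo-inverse defined in the paper.

```python
import numpy as np

# Hypothetical example data (not from the paper): a tall, full-column-rank matrix,
# i.e. more equations than unknowns.
A = np.array([[1.0, 0.0],
              [0.0, 1.0],
              [1.0, 1.0]])
v = np.array([1.0, 2.0, 0.0])

# Moore-Penrose inverse of A; for a 3x2 matrix this has shape (2, 3).
A_pinv = np.linalg.pinv(A)

# The four Penrose conditions, which characterize the Moore-Penrose inverse uniquely.
penrose_ok = (
    np.allclose(A @ A_pinv @ A, A)
    and np.allclose(A_pinv @ A @ A_pinv, A_pinv)
    and np.allclose((A @ A_pinv).T, A @ A_pinv)
    and np.allclose((A_pinv @ A).T, A_pinv @ A)
)

# A_pinv @ v minimizes ||A u - v|| in the Euclidean norm; compare against a
# direct least-squares solve.
u = A_pinv @ v
u_lstsq, *_ = np.linalg.lstsq(A, v, rcond=None)
matches_lstsq = np.allclose(u, u_lstsq)

print("Penrose conditions hold:", penrose_ok)
print("Agrees with least squares:", matches_lstsq)
```

Here `v` is not in the range of `A`, so no exact solution of `A u = v` exists, and `A_pinv @ v` is the best approximation in the least-squares sense.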