Ester Hait-Fraenkel, Guy Gilboa, Revealing stable and unstable modes of denoisers through nonlinear eigenvalue analysis. J. Vis. Commun. Image Represent. 75: 103041 (2021)

Ester Hait-Fraenkel, Guy Gilboa, arXiv

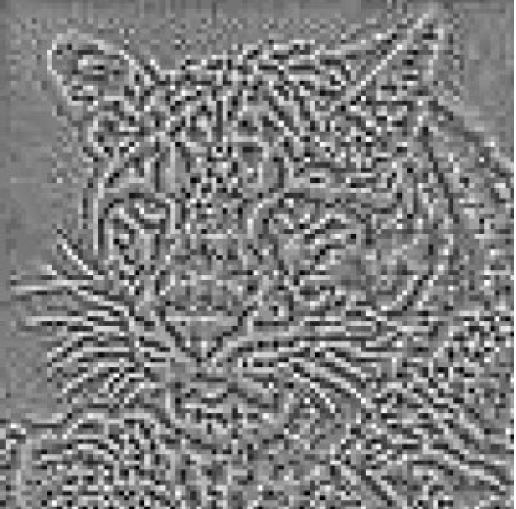

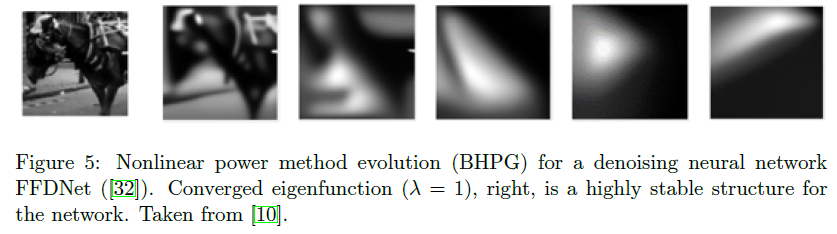

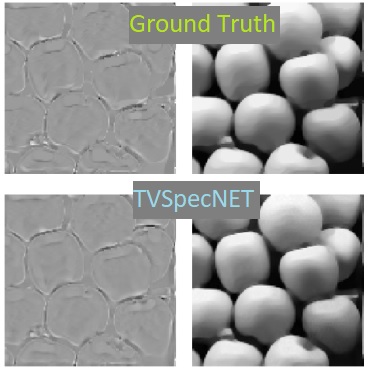

In this paper, we propose to analyze stable and unstable modes of generic image denoisers through nonlinear eigenvalue analysis. We attempt to find input images for which the output of a black-box denoiser is proportional to the input, and we treat this as a nonlinear eigenvalue problem. This formulation has potentially wide implications, since most image processing algorithms can be viewed as generic nonlinear operators. We introduce a generalized nonlinear power method to solve eigenproblems for such black-box operators. Using this method, we reveal stable modes of nonlinear denoisers. These modes are optimal inputs for the denoiser, achieving superior PSNR in noise removal. Analogously to the linear case (a low-pass filter), such stable modes are eigenfunctions corresponding to large eigenvalues, characterized by large piecewise-smooth structures. We also provide a method to generate the complementary, most unstable modes, which the denoiser suppresses strongly. These modes are textures with small eigenvalues. We validate the method using total variation (TV) and demonstrate it on the EPLL denoiser (Zoran and Weiss). Finally, we suggest an encryption-decryption application.
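
To make the eigenproblem concrete: writing the denoiser as an operator T, the sought images u satisfy T(u) = λu for some scalar λ. The sketch below is a basic normalized power iteration on a black-box callable, not the generalized method of the paper, whose details are not given in this abstract. The names `smooth`, `rayleigh` and `power_method` are hypothetical, and `smooth` is a linear low-pass stand-in chosen only so the result is easy to check; any denoiser with the same array-in, array-out interface could be passed instead, although convergence is then not guaranteed by this sketch.

```python
import numpy as np


def smooth(u):
    """Stand-in denoiser (assumption): separable [1/4, 1/2, 1/4] blur, periodic borders."""
    for axis in (0, 1):
        u = 0.5 * u + 0.25 * (np.roll(u, 1, axis) + np.roll(u, -1, axis))
    return u


def rayleigh(T, u):
    """Eigenvalue estimate <u, T(u)> / <u, u> for an operator T at image u."""
    return float(np.vdot(u, T(u)) / np.vdot(u, u))


def power_method(T, u0, n_iter=500, tol=1e-10):
    """Apply T repeatedly, rescaling each iterate to the norm of the initial image."""
    norm0 = np.linalg.norm(u0)
    u = u0.copy()
    for _ in range(n_iter):
        v = T(u)
        v_norm = np.linalg.norm(v)
        if v_norm == 0.0:
            break  # T mapped the iterate to zero; nothing left to normalize
        v = v * (norm0 / v_norm)
        converged = np.linalg.norm(v - u) <= tol * norm0
        u = v
        if converged:
            break
    return u, rayleigh(T, u)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    u0 = rng.random((16, 16))
    u, lam = power_method(smooth, u0)
    residual = np.linalg.norm(smooth(u) - lam * u) / np.linalg.norm(u)
    print(f"eigenvalue estimate: {lam:.6f}, relative residual: {residual:.2e}")
```

For this linear stand-in the iteration settles on a nearly constant image with eigenvalue close to 1, which mirrors the low-pass analogy in the abstract: the most stable mode is the smoothest one. The relative residual of T(u) - λu gives a simple check of how close an iterate is to an eigenfunction.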