1. O. Spier, T. Treibitz, G. Gilboa, “In situ target-less calibration of turbid media”, Int. Conf. on Computational Photography (ICCP), Stanford Univ., 2017.

Abstract:

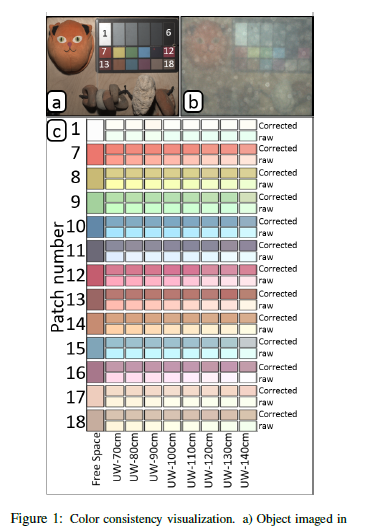

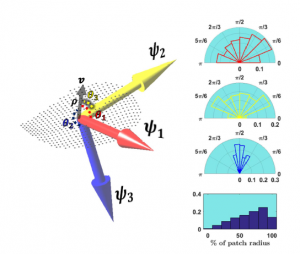

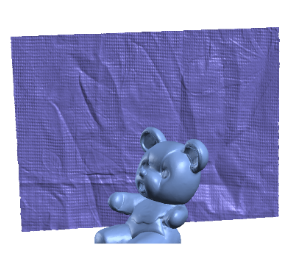

The color of an object imaged in a turbid medium varies with distance and medium properties, making color an unstable source of information. Assuming 3D scene structure has become relatively easy to estimate, the main challenge in color recovery is calibrating medium properties in situ, at the time of acquisition. Existing attenuation calibration methods use either color charts, external hardware, or multiple images of an object. Here we show that none of these is needed for calibration. We suggest a method for estimating the medium properties (both attenuation and scattering) using only images of backscattered light from the system’s light sources. This is advantageous in turbid media where the object signal is noisy, and also alleviates the need for correspondence matching, which can be difficult in high turbidity. We demonstrate the advantages of our method through simulations and in a real-life experiment at sea.